What Does It Mean When My 2011 Armada /can't Change Gears

What does RMSE really mean?

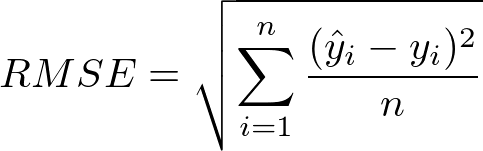

Root Mean Square Error (RMSE) is a standard way to measure the error of a model in predicting quantitative data. Formally it is defined as follows:

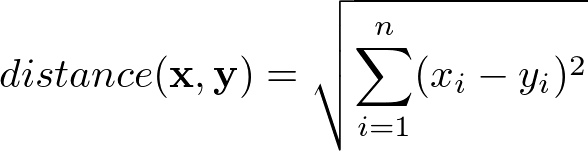
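As a minimal sketch of the definition above, the hypothetical helper `rmse` below computes RMSE with the Python standard library. It assumes `predicted` holds the ŷᵢ and `observed` holds the yᵢ, in the same order.

```python
import math
from typing import Sequence


def rmse(predicted: Sequence[float], observed: Sequence[float]) -> float:
    """Root mean square error between predictions (y-hat_i) and observations (y_i)."""
    if len(predicted) != len(observed):
        raise ValueError("predicted and observed must have the same length")
    if len(observed) == 0:
        raise ValueError("need at least one observation")
    n = len(observed)
    # Average the squared differences (y-hat_i - y_i)^2 over the n observations...
    mean_squared_error = sum((p - o) ** 2 for p, o in zip(predicted, observed)) / n
    # ...then take the square root so the result is in the same units as y.
    return math.sqrt(mean_squared_error)


# Every prediction is off by exactly 2, so RMSE is 2.0.
print(rmse([1.0, 2.0, 3.0, 4.0], [3.0, 4.0, 5.0, 6.0]))  # 2.0
```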

Let's try to explore why this measure of error makes sense from a mathematical perspective. Ignoring the division by n under the square root, the first thing we can notice is a resemblance to the formula for the Euclidean distance between two vectors in ℝⁿ:

$$d(\hat{y}, y) = \sqrt{\sum_{i=1}^{n} (\hat{y}_i - y_i)^2}$$

This tells us heuristically that RMSE can be thought of as some kind of (normalized) distance between the vector of predicted values and the vector of observed values.

But why are we dividing by n under the square root here? If we keep n (the number of observations) fixed, all it does is rescale the Euclidean distance by a factor of √(1/n). It's a bit tricky to see why this is the right thing to do, so let's dig in a bit deeper.
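The rescaling claim is easy to check numerically. This sketch, using made-up vectors, compares `math.dist` (the Euclidean distance in ℝⁿ) with RMSE computed from its definition; for a fixed n the two differ only by the factor √(1/n).

```python
import math

# Made-up predicted and observed vectors in R^n (here n = 4).
predicted = [2.5, 0.0, 2.1, 7.8]
observed = [3.0, -0.5, 2.0, 7.0]
n = len(observed)

# Euclidean distance between the two vectors (no division by n).
distance = math.dist(predicted, observed)

# RMSE straight from its definition.
rmse = math.sqrt(sum((p - o) ** 2 for p, o in zip(predicted, observed)) / n)

# Rescaling the distance by sqrt(1/n) recovers RMSE.
print(f"distance = {distance:.4f}, RMSE = {rmse:.4f}, "
      f"distance * sqrt(1/n) = {distance * math.sqrt(1 / n):.4f}")
```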

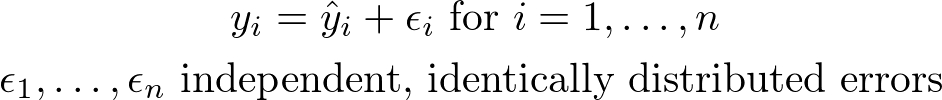

Imagine that our observed values are determined by adding random "errors" to each of the predicted values, as follows:

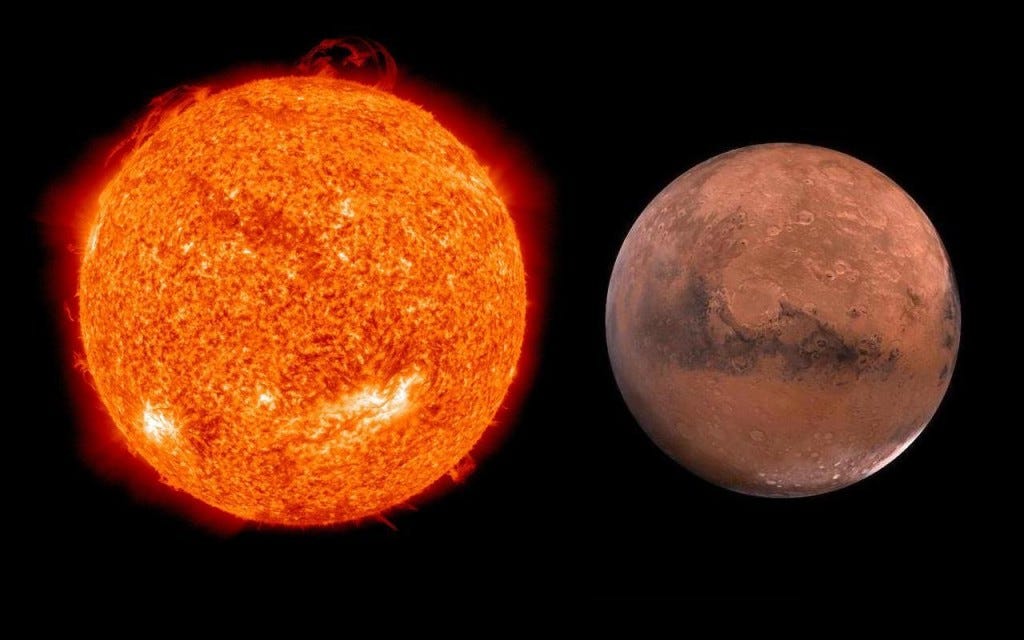

These errors, thought of as random variables, might have a Gaussian distribution with mean μ and standard deviation σ, but any other distribution with a square-integrable PDF (probability density function) would also work. We want to think of ŷᵢ as an underlying physical quantity, such as the exact distance from Mars to the Sun at a particular point in time. Our observed quantity yᵢ would then be the distance from Mars to the Sun as we measure it, with some errors coming from mis-calibration of our telescopes and measurement noise from atmospheric interference.

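The mean μ of the distribution of our errors would correspond to a persistent bias coming from mis-calibration, while the standard deviation σ would correspond to the amount of measurement noise. Imagine now that we know the mean μ of the distribution for our errors exactly and would like to estimate the standard deviation σ. We can see through a bit of calculation that:

$$
\begin{aligned}
&\mathbb{E}\left[\frac{1}{n}\sum_{i=1}^{n}(\hat{y}_i - y_i)^2\right] \\
&\quad= \mathbb{E}\left[\frac{1}{n}\sum_{i=1}^{n}\varepsilon_i^2\right] \\
&\quad= \frac{1}{n}\sum_{i=1}^{n}\mathbb{E}\left[\varepsilon_i^2\right] \\
&\quad= \mathbb{E}\left[\varepsilon^2\right] \\
&\quad= \operatorname{Var}(\varepsilon) + \mathbb{E}[\varepsilon]^2 \\
&\quad= \sigma^2 + \mu^2
\end{aligned}
$$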

Here E[…] is the expectation, and Var(…) is the variance. We can replace the average of the expectations E[εᵢ²] on the third line with E[ε²] on the fourth line, where ε is a variable with the same distribution as each of the εᵢ, because the errors εᵢ are identically distributed, and thus their squares all have the same expectation.
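A quick Monte Carlo check of this calculation, as a sketch under stated assumptions: Gaussian errors with hypothetical values μ = 0.5 and σ = 2, and arbitrary made-up "true" values ŷᵢ. Averaging Σᵢ (ŷᵢ − yᵢ)² / n over many simulated data sets should land close to σ² + μ² = 4.25.

```python
import random
import statistics


def simulated_mean_squared_error(mu: float, sigma: float, n: int, rng: random.Random) -> float:
    """One draw of sum_i (y-hat_i - y_i)^2 / n with y_i = y-hat_i + eps_i, eps_i ~ N(mu, sigma^2)."""
    # Hypothetical underlying quantities y-hat_i; they cancel out of the residuals.
    true_values = [rng.uniform(0.0, 100.0) for _ in range(n)]
    observed = [t + rng.gauss(mu, sigma) for t in true_values]
    return sum((t - y) ** 2 for t, y in zip(true_values, observed)) / n


rng = random.Random(42)  # fixed seed so the run is reproducible
mu, sigma, n = 0.5, 2.0, 50
draws = [simulated_mean_squared_error(mu, sigma, n, rng) for _ in range(2_000)]
estimate = statistics.fmean(draws)
print(f"average of sum (y-hat_i - y_i)^2 / n: {estimate:.3f}")
print(f"sigma^2 + mu^2:                      {sigma ** 2 + mu ** 2:.3f}")
```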

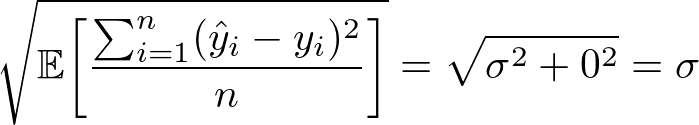

Remember that we assumed we already knew μ exactly. That is, the persistent bias in our instruments is a known bias, rather than an unknown bias. So we might as well correct for this bias right off the bat by subtracting μ from all our raw observations. That is, we might as well suppose our errors are already distributed with mean μ = 0. Plugging this into the equation above and taking the square root of both sides then yields:

$$\sqrt{\mathbb{E}\left[\frac{1}{n}\sum_{i=1}^{n}(\hat{y}_i - y_i)^2\right]} = \sigma$$

Notice the left-hand side looks familiar! If we removed the expectation E[…] from inside the square root, it is exactly our formula for RMSE from before. As n gets larger, the quantity Σᵢ (ŷᵢ − yᵢ)² / n = Σᵢ (εᵢ)² / n should concentrate around its expectation: if the errors are independent and ε has a finite fourth moment, its variance is exactly Var(ε²) / n, which converges to 0 like 1/n (this is the usual argument behind the law of large numbers). This tells us that Σᵢ (ŷᵢ − yᵢ)² / n is a good estimator for E[Σᵢ (ŷᵢ − yᵢ)² / n] = σ². But then RMSE is a good estimator for the standard deviation σ of the distribution of our errors!
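To see this convergence, the sketch below (hypothetical σ = 3, Gaussian errors already centered so μ = 0) repeats the experiment on many simulated data sets for growing n. The average RMSE should approach σ, while the variance of Σᵢ εᵢ² / n should shrink roughly like 1/n, so n times that variance stays roughly constant (near 2σ⁴ = 162 for Gaussian errors).

```python
import math
import random
import statistics

rng = random.Random(7)
sigma = 3.0   # hypothetical noise standard deviation
trials = 100  # independent data sets simulated for each n
results = {}

for n in (10, 100, 1_000, 10_000):
    mean_squares = []
    for _ in range(trials):
        # With y_i = y-hat_i + eps_i and mu = 0, each residual y-hat_i - y_i is just -eps_i.
        errors = [rng.gauss(0.0, sigma) for _ in range(n)]
        mean_squares.append(sum(e * e for e in errors) / n)
    mean_rmse = statistics.fmean([math.sqrt(m) for m in mean_squares])
    variance = statistics.variance(mean_squares)
    results[n] = (mean_rmse, variance)
    print(f"n={n:>6}  mean RMSE={mean_rmse:.3f}  "
          f"Var(sum eps^2 / n)={variance:.4f}  n * Var={n * variance:.1f}")
```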

We should also now have an explanation for the division by n under the square root in RMSE: it allows us to estimate the standard deviation σ of the error for a typical single observation rather than some kind of "total error". By dividing by n, we keep this measure of error consistent as we move from a small collection of observations to a larger collection (it just becomes more accurate as we increase the number of observations). To phrase it another way, RMSE is a good way to answer the question: "How far off should we expect our model to be on its next prediction?"
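The role of n can also be seen side by side. In this sketch (hypothetical σ = 2, simulated zero-mean Gaussian residuals), the Euclidean distance, a kind of "total error", keeps growing roughly like σ√n as observations are added, while RMSE settles near σ.

```python
import math
import random

rng = random.Random(1)
sigma = 2.0  # hypothetical per-observation noise level
sizes = (10, 100, 1_000, 10_000)
distances, rmses = [], []

for n in sizes:
    # Simulated residuals y-hat_i - y_i for n observations.
    residuals = [rng.gauss(0.0, sigma) for _ in range(n)]
    total_error = math.sqrt(sum(r * r for r in residuals))  # no division by n
    distances.append(total_error)
    rmses.append(total_error / math.sqrt(n))                # divide by n under the root
    print(f"n={n:>6}  Euclidean distance={total_error:8.2f}  RMSE={rmses[-1]:.3f}")
```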

To sum up our discussion, RMSE is a good measure to use if we want to estimate the standard deviation σ of a typical observed value from our model's prediction, assuming that our observed data can be decomposed as:

$$\text{observed value} = \text{predicted value} + \text{random noise}$$

The random noise here could be anything that our model does not capture (e.g., unknown variables that might influence the observed values). If the noise is small, as estimated by RMSE, this generally means our model is good at predicting our observed data, and if RMSE is large, this generally means our model is failing to account for important features underlying our data.
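Here is a small illustration of that last point, with entirely hypothetical data: y depends on two inputs x and z plus Gaussian noise with σ = 1. A model that captures both inputs (here simply the true coefficients, to keep the sketch short) has RMSE close to the noise level, while a model that ignores z is left with a much larger RMSE, because the missing feature ends up in its "noise".

```python
import math
import random


def rmse(predicted, observed):
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(observed))


rng = random.Random(0)
n = 1_000
# Hypothetical data: y depends on x and z, plus Gaussian noise with sigma = 1.
xs = [rng.uniform(0.0, 10.0) for _ in range(n)]
zs = [rng.uniform(0.0, 10.0) for _ in range(n)]
ys = [2 * x + 3 * z + rng.gauss(0.0, 1.0) for x, z in zip(xs, zs)]

# Model that captures both features (uses the true coefficients).
full_model = [2 * x + 3 * z for x, z in zip(xs, zs)]
# Model that ignores z and replaces its contribution by its average value, 3 * 5 = 15.
partial_model = [2 * x + 15 for x in xs]

rmse_full = rmse(full_model, ys)
rmse_partial = rmse(partial_model, ys)
print(f"RMSE, model using x and z: {rmse_full:.2f}")
print(f"RMSE, model ignoring z:    {rmse_partial:.2f}")
```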

RMSE in Data Science: Subtleties of Using RMSE

In data science, RMSE has a double purpose:

- To serve as a heuristic for training models

- To evaluate trained models for usefulness / accuracy

This raises an important question: What does it mean for RMSE to be "small"?

We should note first and foremost that "small" will depend on our choice of units, and on the specific application we are hoping for. 100 inches is a big error in a building design, but 100 nanometers is not. On the other hand, 100 nanometers is a small error in fabricating an ice cube tray, but maybe a large error in fabricating an integrated circuit.
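A short sketch of the unit dependence, with made-up measurements: converting the same data from meters to millimeters multiplies RMSE by 1000, even though the predictions are exactly as good or bad as before. Whether the result counts as "small" still has to come from the application.

```python
import math


def rmse(predicted, observed):
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(observed))


# Hypothetical predictions and measurements of a length, in meters.
predicted_m = [1.20, 0.95, 2.10, 1.75]
observed_m = [1.25, 0.90, 2.00, 1.80]
rmse_m = rmse(predicted_m, observed_m)

# The same data expressed in millimeters (1 m = 1000 mm).
rmse_mm = rmse([1000 * p for p in predicted_m], [1000 * o for o in observed_m])

print(f"RMSE = {rmse_m:.4f} m = {rmse_mm:.1f} mm")
```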

For training models, it doesn't really matter what units we are using, since all we care about during training is having a heuristic to help us decrease the error with each iteration. We care only about the relative size of the error from one step to the next, not the absolute size of the error.
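The training point can be made concrete. In this sketch, a hypothetical model y = a·x with a handful of candidate slopes (think of them as successive training iterates) is scored in meters and in millimeters. The RMSE values change by a factor of 1000, but which candidate is best, and how the candidates compare to one another, does not.

```python
import math


def rmse(predicted, observed):
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(observed))


# Hypothetical training data: inputs xs and observations ys, in meters.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

# Candidate slopes for the hypothetical model y = a * x.
candidates = [1.0, 1.5, 1.9, 2.0, 2.5]


def score_candidates(scale):
    """RMSE of each candidate after converting y to other units (scale=1000 turns m into mm)."""
    return {a: rmse([scale * a * x for x in xs], [scale * y for y in ys]) for a in candidates}


errors_m = score_candidates(1.0)
errors_mm = score_candidates(1000.0)
best_m = min(errors_m, key=errors_m.get)
best_mm = min(errors_mm, key=errors_mm.get)
print(f"best slope in meters: {best_m}, in millimeters: {best_mm}")
```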

But in evaluating trained models in data science for usefulness / accuracy, we do care about units, because we aren't just trying to see if we're doing better than last time: we want to know if our model can actually help us solve a practical problem. The subtlety here is that evaluating whether RMSE is sufficiently small or not will depend on how accurate we need our model to be for our given application. There is never going to be a mathematical formula for this, because it depends on things like human intentions ("What are you intending to do with this model?"), risk aversion ("How much harm would be caused if this model made a bad prediction?"), etc.

Besides units, there is another consideration too: "small" also needs to be measured relative to the type of model being used, the number of data points, and the history of training the model went through before you evaluated it for accuracy. At first this may sound counter-intuitive, but not when you remember the problem of over-fitting.

There is a risk of over-fitting whenever the number of parameters in your model is large relative to the number of data points you have. For example, if we are trying to predict one real quantity y as a function of another real quantity x, and our observations are (xᵢ, yᵢ) with x₁ < x₂ < x₃ < …, a general interpolation theorem tells us there is some polynomial f(x) of degree at most n − 1 with f(xᵢ) = yᵢ for i = 1, …, n. This means if we chose our model to be a degree n − 1 polynomial, by tweaking the parameters of our model (the coefficients of the polynomial), we would be able to bring RMSE all the way down to 0. This is true regardless of what our y values are. In this case RMSE isn't really telling us anything about the accuracy of our underlying model: we were guaranteed to be able to tweak parameters to get RMSE = 0 as measured on our existing data points, regardless of whether there is any relationship between the two real quantities at all.
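The interpolation argument can be reproduced directly. This sketch builds the Lagrange interpolating polynomial (degree at most n − 1) through n points whose y values are pure random noise, with no relationship to x at all; the in-sample RMSE comes out as 0, up to floating-point rounding. The data and helper names are hypothetical.

```python
import math
import random


def lagrange_interpolant(xs, ys):
    """Return f with f(xs[i]) == ys[i]: the polynomial of degree <= n - 1 through all n points."""
    def f(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            # Lagrange basis polynomial L_i: equals 1 at xs[i] and 0 at every other xs[j].
            basis = 1.0
            for j, xj in enumerate(xs):
                if j != i:
                    basis *= (x - xj) / (xi - xj)
            total += yi * basis
        return total
    return f


def rmse(predicted, observed):
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(observed))


rng = random.Random(3)
n = 8
xs = [float(i) for i in range(1, n + 1)]     # distinct x_1 < x_2 < ... < x_n
ys = [rng.uniform(-10.0, 10.0) for _ in xs]  # pure noise: no relationship to x at all

f = lagrange_interpolant(xs, ys)  # a "model" with n coefficients
in_sample_rmse = rmse([f(x) for x in xs], ys)
print(f"in-sample RMSE: {in_sample_rmse:.2e}")
```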

But it's not only when the number of parameters exceeds the number of data points that we might run into issues. Even if we don't have an absurdly excessive number of parameters, it may be that general mathematical principles together with mild background assumptions on our data guarantee us with high probability that by tweaking the parameters in our model, we can bring the RMSE below a certain threshold. If we are in such a situation, then RMSE being below this threshold may not say anything meaningful about our model's predictive power.
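A minimal sketch of this effect with only two parameters, using hypothetical data where y is pure Gaussian noise unrelated to x. Ordinary least squares minimizes the squared error over all lines, and the true model (the constant 0) is one of those lines, so the fitted line's in-sample RMSE can never exceed the true model's RMSE. The lower number reflects parameter tweaking, not predictive power.

```python
import math
import random


def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b * x; returns (a, b)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    return mean_y - b * mean_x, b


def rmse(predicted, observed):
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(observed))


rng = random.Random(11)
sigma = 1.0
xs = [rng.uniform(0.0, 10.0) for _ in range(20)]
# Hypothetical truth: y does not depend on x at all, y_i = 0 + eps_i.
ys = [rng.gauss(0.0, sigma) for _ in xs]

a, b = fit_line(xs, ys)
rmse_fitted = rmse([a + b * x for x in xs], ys)
rmse_true_model = rmse([0.0] * len(xs), ys)  # the true model predicts 0 everywhere
print(f"fitted line (a={a:.3f}, b={b:.3f}): RMSE = {rmse_fitted:.3f}")
print(f"true model:                 RMSE = {rmse_true_model:.3f}")
```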

If we wanted to think like a statistician, the question we would be asking is not "Is the RMSE of our trained model small?" but rather, "What is the probability the RMSE of our trained model on such-and-such set of observations would be this small by random chance?"
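One standard way to attack that question, swapped in here as an illustration rather than something the text prescribes, is a permutation test: shuffle the observed y values to destroy any real relationship between x and y, refit, and count how often chance alone produces an RMSE at least as small. The sketch below does this for a least-squares line on two hypothetical data sets; all names and data are made up.

```python
import math
import random


def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b * x; returns (a, b)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    return mean_y - b * mean_x, b


def fitted_rmse(xs, ys):
    """In-sample RMSE of the least-squares line."""
    a, b = fit_line(xs, ys)
    return math.sqrt(sum((a + b * x - y) ** 2 for x, y in zip(xs, ys)) / len(ys))


def permutation_p_value(xs, ys, n_permutations=1_000, seed=0):
    """Estimated probability that shuffled data (no real relationship) fits at least as well."""
    rng = random.Random(seed)
    observed = fitted_rmse(xs, ys)
    shuffled = list(ys)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(shuffled)
        if fitted_rmse(xs, shuffled) <= observed:
            hits += 1
    # Count the observed data set itself so the estimate is never exactly zero.
    return (hits + 1) / (n_permutations + 1)


data_rng = random.Random(5)
xs = [data_rng.uniform(0.0, 10.0) for _ in range(30)]
related = [2 * x + data_rng.gauss(0.0, 1.0) for x in xs]  # y genuinely depends on x
unrelated = [data_rng.gauss(0.0, 1.0) for _ in xs]        # y is pure noise

p_related = permutation_p_value(xs, related)
p_unrelated = permutation_p_value(xs, unrelated)
print(f"related data:   p = {p_related:.3f}")
print(f"unrelated data: p = {p_unrelated:.3f}")
```

Shuffling keeps the set of y values (and hence their spread) fixed, so the comparison isolates whether the pairing with x matters.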

These kinds of questions get a bit complicated (you actually have to do statistics), but hopefully you get the picture of why there is no predetermined threshold for "small enough RMSE", as easy as that would make our lives.

Source: https://towardsdatascience.com/what-does-rmse-really-mean-806b65f2e48e

Posted by: richardsonreepris1964.blogspot.com
